Artificial Intelligence: GPT with Harbour and FWH

Posted: Fri Aug 14, 2020 11:37 am

In July 2020, OpenAI released GPT-3 (175 billion parameters) as a private beta, and it has been a tremendous advance in AI natural language processing. GPT-3 builds on earlier products and research such as Microsoft Turing-NLG (17 billion parameters), NVIDIA Megatron (8 billion parameters) and GPT-2 (1.5 billion parameters). Google has even announced GShard, a newer model that uses up to 600 billion parameters.

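GPT stands for "Generative Pre-trained Transformer". "Generative" means that its job is to generate the next word given some preceding words. "Pre-trained" means that the model has been trained in advance on large amounts of text. The Transformer is the type of neural network it uses.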
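
A minimal sketch of the "given some words, generate the next one" idea in plain Harbour (console, no FWH). It counts which word follows which in a few sample sentences and returns the most frequent follower. This bigram counter is only an illustration: GPT models learn these predictions with a trained neural network, not with a count table. `TrainBigrams()` and `NextWord()` are hypothetical names.

```harbour
// Hypothetical example: next-word prediction from bigram counts.
// Illustration only; GPT models use a trained neural network instead.
PROCEDURE Main()

   LOCAL hNext := TrainBigrams( { "the cat eats mouse", "the mouse eats cheese", ;
                                  "the cat eats fish", "the dog eats meat" } )

   ? NextWord( hNext, "the" )    // cat  ("the cat" appears twice)
   ? NextWord( hNext, "cat" )    // eats
   ? NextWord( hNext, "fish" )   // empty string: never followed by a word

   RETURN

// Builds a hash: word -> hash of { following word -> count }
FUNCTION TrainBigrams( aSentences )

   LOCAL hNext := { => }
   LOCAL cSentence, aWords, n, cWord, cFollow

   FOR EACH cSentence IN aSentences
      aWords := hb_ATokens( Lower( AllTrim( cSentence ) ), " " )
      FOR n := 1 TO Len( aWords ) - 1
         cWord   := aWords[ n ]
         cFollow := aWords[ n + 1 ]
         IF ! hb_HHasKey( hNext, cWord )
            hNext[ cWord ] := { => }
         ENDIF
         hNext[ cWord ][ cFollow ] := hb_HGetDef( hNext[ cWord ], cFollow, 0 ) + 1
      NEXT
   NEXT

   RETURN hNext

// Returns the most frequent follower of cWord ("" if none is known)
FUNCTION NextWord( hNext, cWord )

   LOCAL hFollow, cFollow, cBest := "", nBest := 0

   cWord := Lower( cWord )
   IF hb_HHasKey( hNext, cWord )
      hFollow := hNext[ cWord ]
      FOR EACH cFollow IN hb_HKeys( hFollow )
         IF hFollow[ cFollow ] > nBest   // strict ">": on a tie the earlier key wins
            nBest := hFollow[ cFollow ]
            cBest := cFollow
         ENDIF
      NEXT
   ENDIF

   RETURN cBest
```

The program below uses a database and longer contexts instead of a hash of word pairs, but the question it answers is the same: which words have followed these words before?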

Seven years ago, Google developed and released "word2vec", a set of routines that "vectorize" words, that is, represent each word as a vector of numbers. As an analogy, consider colors. A color can be represented by 3 numbers (red, green, blue), which can be read as x, y, z coordinates of a vector in space. This way we can add "red" and "blue" and obtain "purple". This vector representation lets us "add" colors, "subtract" them, find "nearby" colors, etc.
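
The color analogy can be tried directly. This sketch (plain Harbour, hypothetical function names) stores a few colors as RGB vectors using the usual web color values, "adds" two colors by averaging their vectors so the result stays within 0..255 (an assumption: a plain sum of red and blue gives 255,0,255, which is magenta), subtracts vectors, and finds the nearest named color by Euclidean distance.

```harbour
// Hypothetical example: colors as 3D vectors (red, green, blue)
PROCEDURE Main()

   LOCAL hPal := Palette()

   ? Nearest( hPal, VecMix( hPal[ "red" ], hPal[ "blue" ] ) )       // purple
   ? Nearest( hPal, VecSub( hPal[ "magenta" ], hPal[ "red" ] ) )    // blue

   RETURN

// A few named colors with their web RGB values
FUNCTION Palette()
   RETURN { "red"     => { 255,   0,   0 }, ;
            "blue"    => {   0,   0, 255 }, ;
            "purple"  => { 128,   0, 128 }, ;
            "magenta" => { 255,   0, 255 }, ;
            "black"   => {   0,   0,   0 }, ;
            "white"   => { 255, 255, 255 } }

// "Adds" two colors by averaging them component by component
FUNCTION VecMix( a, b )
   RETURN { ( a[ 1 ] + b[ 1 ] ) / 2, ( a[ 2 ] + b[ 2 ] ) / 2, ( a[ 3 ] + b[ 3 ] ) / 2 }

// Subtracts color b from color a component by component
FUNCTION VecSub( a, b )
   RETURN { a[ 1 ] - b[ 1 ], a[ 2 ] - b[ 2 ], a[ 3 ] - b[ 3 ] }

// Euclidean distance between two colors
FUNCTION ColorDist( a, b )
   RETURN Sqrt( ( a[ 1 ] - b[ 1 ] ) ^ 2 + ( a[ 2 ] - b[ 2 ] ) ^ 2 + ( a[ 3 ] - b[ 3 ] ) ^ 2 )

// Name of the palette color closest to aColor
FUNCTION Nearest( hPal, aColor )

   LOCAL cName, nDist, cBest := "", nBest := -1

   FOR EACH cName IN hb_HKeys( hPal )
      nDist := ColorDist( hPal[ cName ], aColor )
      IF nBest < 0 .OR. nDist < nBest
         nBest := nDist
         cBest := cName
      ENDIF
   NEXT

   RETURN cBest
```

Here "near" means the smallest Euclidean distance. word2vec's own tools rank words by cosine similarity between their vectors instead, but the idea of "closest vector, closest meaning" is the same.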

word2vec (https://code.google.com/archive/p/word2vec/) generates these vectors from a given text, and the results are quite impressive. I have managed to build it on Windows, in case you want to test it.

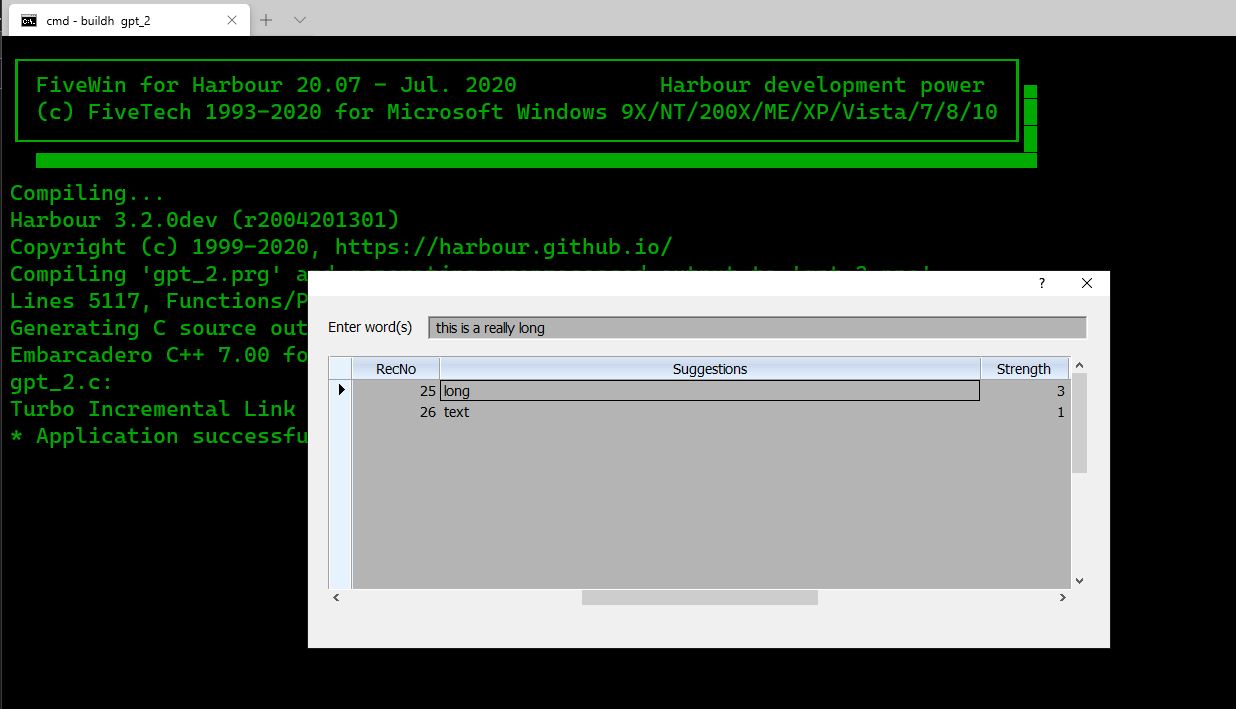

With these ideas in mind, Rao and I built a simple Harbour example, using FWH for the GUI, to test the basic concepts of a very simple GPT. Here is the code: build it and see how it suggests the next word. You can feed it your own texts. In a follow-up message we will explain how it works and how you can apply it to your existing apps.

gpt_2.prg


#include "FiveWin.ch"

request DBFCDX

field WORDS

// Context size: how many preceding words are used to look up the next one
static nSize := 3

function Main()

   local oFont, oDlg, oBrw, oGet
   local cSearch := Space( 60 )
   // Training texts: replace them with your own sentences
   local aSentences := { "the cat eats mouse", "the mouse eats cheese", ;
                         "the cat eats fish", "the fish eats fly", "the dog eats meat", ;
                         "the dog barks at strangers", "the dog bites thieves", ;
                         "this is a really long long text", "this was a stage" }

   // Start from an empty table on every run
   FErase( "GPT_2.dbf" )
   FErase( "GPT_2.cdx" )

   if ! File( "GPT_2.dbf" )
      DbCreate( "GPT_2.dbf", { { "ID", "+", 10, 0 }, { "WORDS", "C", 30, 0 } }, "DBFCDX" )
      USE GPT_2 VIA "DBFCDX"
      // CUSTOM index: keys are not computed from WORDS, they are added
      // explicitly with OrdKeyAdd() in RecordSentence()
      INDEX ON WORDS TAG GPT_2 CUSTOM
      USE
   endif

   USE GPT_2 VIA "DBFCDX"
   SET ORDER TO TAG GPT_2

   // Store every sentence (one record per new word pair, plus opening words)
   AEval( aSentences, { |c| RecordSentence( c ) } )

   // Keys starting with a space are sentence-opening words
   OrdScope( 0, " " )
   OrdScope( 1, " " )
   GO TOP

   SetGetColorFocus()

   DEFINE FONT oFont NAME "TAHOMA" SIZE 0,-14

   DEFINE DIALOG oDlg SIZE 800, 350 PIXEL TRUEPIXEL FONT oFont

   @ 22, 20 SAY "Enter word(s)" SIZE 100, 20 PIXEL OF oDlg

   // Refresh the suggestions each time the space bar (key 32) is pressed
   @ 20, 120 GET oGet VAR cSearch SIZE 660,24 PIXEL OF oDlg ;
      ON CHANGE ( If( nKey == 32, ResetBrowse( oGet:GetText(), oBrw ), nil ) ) ;
      UPDATE

   @ 60, 20 XBROWSE oBrw SIZE 760, 250 PIXEL OF oDlg ;
      DATASOURCE ReadSuggestions( "" ) ;
      COLUMNS 1, 2, 3 ;
      HEADERS "RecNo", "Suggestions", "Strength" NOBORDER

   WITH OBJECT oBrw
      :nStretchCol := 2
      // Double-click a suggestion to append it to the text
      :aCols[ 2 ]:bLDClickData := <||
         oGet:cText := PadR( Trim( cSearch ) + " " + Trim( oBrw:aCols[ 2 ]:Value ), 60 )
         ResetBrowse( cSearch, oBrw )
         return nil
      >
      :CreateFromCode()
   END

   ACTIVATE DIALOG oDlg CENTERED

   oFont:End()

   // Debug view after the dialog closes: index keys starting with "this "
   OrdScope( 0, "this " ); OrdScope( 1, "this " )
   XBROWSER "GPT_2" COLUMNS "id", "words", "OrdKeyVal()", "OrdKeyCount()"

return nil

function ResetBrowse( cText, oBrw )

   local aSuggest := ReadSuggestions( cText )

   oBrw:aArrayData := aSuggest
   oBrw:GoTop()
   oBrw:Refresh()

return nil

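// Returns { RecNo, next word, strength } rows;
// strength = number of context words that matched
function ReadSuggestions( cText )

   local aRet := {}
   local nTokens, n, nn, cKey

   cText := Lower( AllTrim( cText ) )

   // No text yet: suggest the sentence-opening words
   if Empty( cText )
      OrdScope( 0, " " )
      OrdScope( 1, " " )
      dbGoTop()
      DbEVal( { || AAdd( aRet, { RECNO(), WORDS, 1 } ) } )
      return aRet
   endif

   nTokens := NumToken( cText )
   SetScope( nil )

   // Quick exit if the last word was never followed by another word
   // (the trailing space makes the seek match whole words only)
   if ! dbSeek( Token( cText ) + " " )
      return aRet
   endif

   // Try the last nSize words as context first, then shorter contexts
   for n := Max( 1, nTokens - nSize + 1 ) to nTokens
      cKey := AddTokens( cText, n, nTokens )
      nn   := NumToken( cKey )
      SetScope( cKey + " " )
      // Keep keys with exactly one word after the context, skipping records already listed
      DbEVal( { || If( AScan( aRet, { |a| a[ 1 ] == RECNO() } ) == 0, AAdd( aRet, { RECNO(), WORDS, nn } ), nil ) }, ;
              { || NumToken( OrdKeyVal() ) == nn + 1 } )
   next

return aRet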

// Sets top and bottom scope to the same value (nil clears it) and goes top
function SetScope( c )

   OrdScope( 0, c ); OrdScope( 1, c ); dbGoTop()

return nil

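// Adds one sentence to the model: one record per new word pair, indexed by
// the pair and by the longer word sequences (up to nSize + 1 words of
// context) that end in it
function RecordSentence( cText )

   local nToken, nTokens := NumToken( cText )
   local aTokens := Array( nTokens )
   local n, cKey

   AEval( aTokens, { |u,i| aTokens[ i ] := Token( cText, nil, i ) } )

   SET ORDER TO TAG GPT_2

   // Sentence-opening word, stored with a leading space.
   // Seeks are padded to the key length (30) so they match whole keys only
   cKey := " " + Lower( aTokens[ 1 ] )
   if ! dbSeek( PadR( cKey, 30 ) )
      dbAppend()
      WORDS := aTokens[ 1 ]
      OrdKeyAdd( "GPT_2", nil, cKey )
   endif

   for nToken := 1 to Len( aTokens ) - 1
      cKey := Lower( aTokens[ nToken ] + " " + aTokens[ nToken + 1 ] )
      if ! dbSeek( PadR( cKey, 30 ) )
         dbAppend()
         WORDS := aTokens[ nToken + 1 ]
         OrdKeyAdd( "GPT_2", nil, cKey )
         // Also index the new record under longer contexts ending in this pair.
         // Note: they are only added when the word pair itself is new
         if nToken > 1
            for n := nToken - 1 to Max( 1, nToken - nSize ) step -1
               cKey := Lower( AddTokens( cText, n, nToken + 1 ) )
               OrdKeyAdd( "GPT_2", nil, cKey )
            next
         endif
      endif
   next

return nil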

// Returns the last nCount words of cText
function LastTokens( cText, nCount )

   local nTokens := NumToken( cText )
   local n
   local nFrom := Max( nTokens - nCount + 1, 1 )
   local cRet := Token( cText, , nFrom )

   for n := nFrom + 1 to nTokens
      cRet += " " + Token( cText, , n )
   next

return cRet

// Returns words nFrom..nLast of cText joined by single spaces
function AddTokens( cText, nFrom, nLast )

   local n, cRet

   DEFAULT nLast := NumToken( cText )

   nFrom := Max( 1, nFrom )
   cRet  := Token( cText, , nFrom )

   for n := nFrom + 1 to nLast
      cRet += " " + Token( cText, , n )
   next

return cRet
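
To see what RecordSentence() stores in the CUSTOM index, here is a sketch as a separate console program (plain Harbour, no DBF; `SentenceKeys()` and `JoinWords()` are hypothetical helpers). It lists the keys that RecordSentence() would add for one sentence, each paired with the value stored in the WORDS field of the record the key points to. It mirrors the loops above with nSize = 3 and assumes that none of the sentence's word pairs has been stored yet.

```harbour
// Hypothetical helper: the keys RecordSentence() would add for one sentence,
// assuming none of its word pairs is stored yet. Each row is { key, WORDS value }.
PROCEDURE Main()

   LOCAL aRow

   FOR EACH aRow IN SentenceKeys( "the cat eats mouse", 3 )
      ? PadR( '"' + aRow[ 1 ] + '"', 24 ), "->", aRow[ 2 ]
   NEXT

   RETURN

FUNCTION SentenceKeys( cText, nSize )

   LOCAL aWords := hb_ATokens( Lower( AllTrim( cText ) ), " " )
   LOCAL aKeys  := { { " " + aWords[ 1 ], aWords[ 1 ] } }   // sentence-opening word
   LOCAL nToken, n

   FOR nToken := 1 TO Len( aWords ) - 1
      // the word pair itself ...
      AAdd( aKeys, { JoinWords( aWords, nToken, nToken + 1 ), aWords[ nToken + 1 ] } )
      // ... and the longer contexts that end in it (none when nToken == 1)
      FOR n := nToken - 1 TO Max( 1, nToken - nSize ) STEP -1
         AAdd( aKeys, { JoinWords( aWords, n, nToken + 1 ), aWords[ nToken + 1 ] } )
      NEXT
   NEXT

   RETURN aKeys

// Words nFrom..nTo of aWords joined by single spaces
FUNCTION JoinWords( aWords, nFrom, nTo )

   LOCAL cRet := aWords[ nFrom ], n

   FOR n := nFrom + 1 TO nTo
      cRet += " " + aWords[ n ]
   NEXT

   RETURN cRet
```

For "the cat eats mouse" it lists seven keys: " the" (a sentence-opening word), "the cat", "cat eats", "the cat eats", "eats mouse", "cat eats mouse" and "the cat eats mouse". ReadSuggestions( "the cat" ) then scopes on "the cat " and on "cat " and keeps only the keys with exactly one extra word, so "eats" is suggested with strength 2.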